Multi-channel sensor simulation for autonomous control systems.

Forrest Nelson Iandola; Donald Benton MacMillen; Anting Shen; Harsimran Singh Sidhu; Daniel Paden Tomasello; Rohan Nandkumar Phadte; and Paras Jagdish Jain.

October 26, 2021.

US Patent 11,157,014


@misc{iandola2021multi,
  title = {Multi-channel sensor simulation for autonomous control systems},
  author = {Iandola, Forrest Nelson and MacMillen, Donald Benton and Shen, Anting and Sidhu, Harsimran Singh and Tomasello, Daniel Paden and Phadte, Rohan Nandkumar and Jain, Paras Jagdish},
  year = {2021},
  month = oct # {~26},
  note = {US Patent 11,157,014}
}

Systems and methods for training machine models with augmented data.

Matthew John Cooper; Paras Jagdish Jain; and Harsimran Singh Sidhu.

December 21, 2021.

US Patent 11,205,093


@misc{cooper2021systems,
  title = {Systems and methods for training machine models with augmented data},
  author = {Cooper, Matthew John and Jain, Paras Jagdish and Sidhu, Harsimran Singh},
  year = {2021},
  month = dec # {~21},
  note = {US Patent 11,205,093}
}

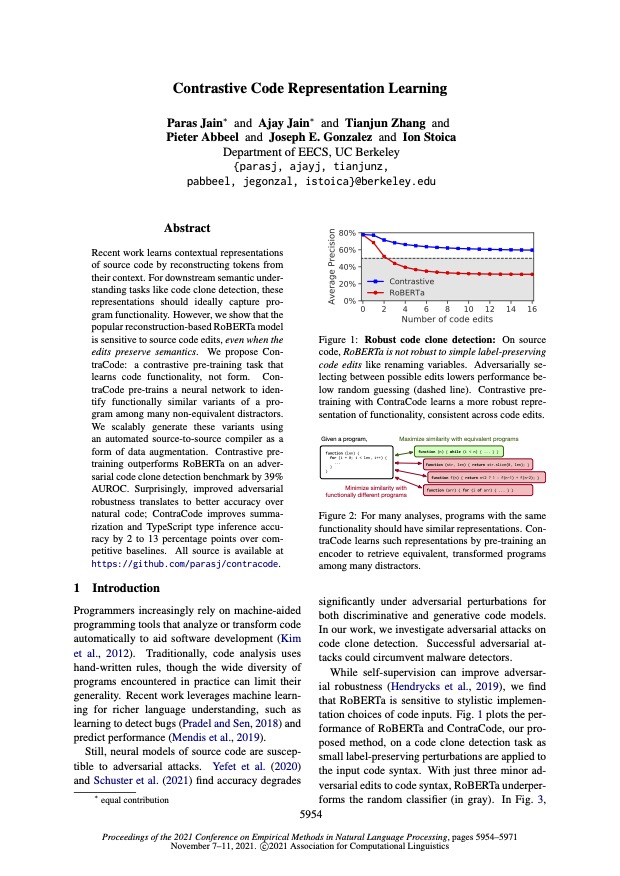

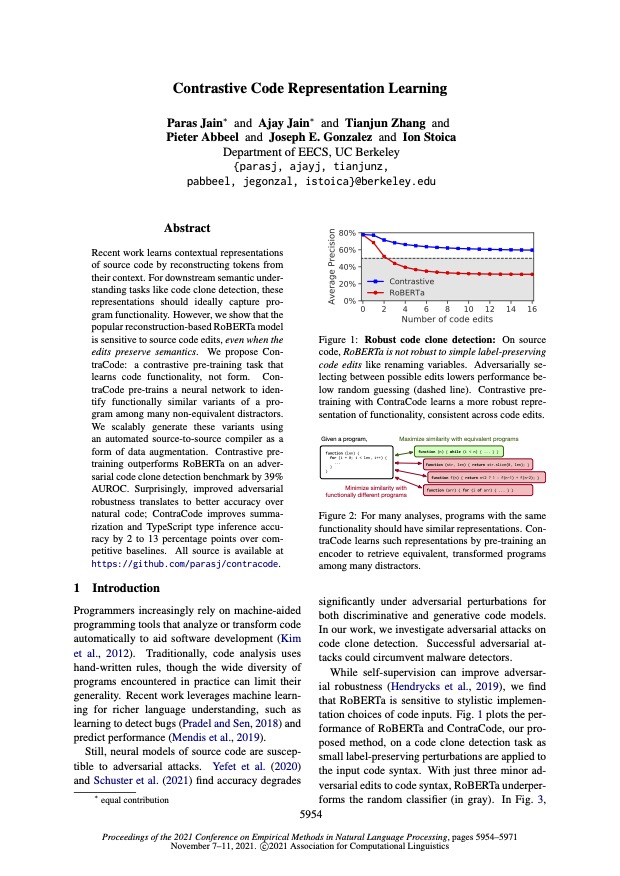

Contrastive Code Representation Learning.

Paras Jain; Ajay Jain; Tianjun Zhang; Pieter Abbeel; Joseph E. Gonzalez; and Ion Stoica.

In EMNLP 2021, 2021.


@inproceedings{jain2021contrastive,
  title = {Contrastive Code Representation Learning},
  author = {Jain, Paras and Jain, Ajay and Zhang, Tianjun and Abbeel, Pieter and Gonzalez, Joseph E and Stoica, Ion},
  booktitle = {EMNLP 2021},
  year = {2021}
}

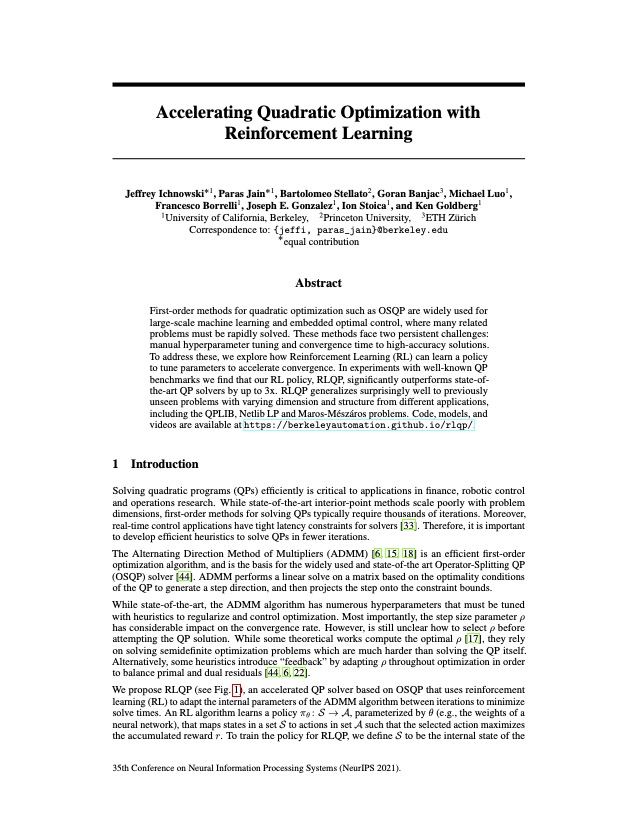

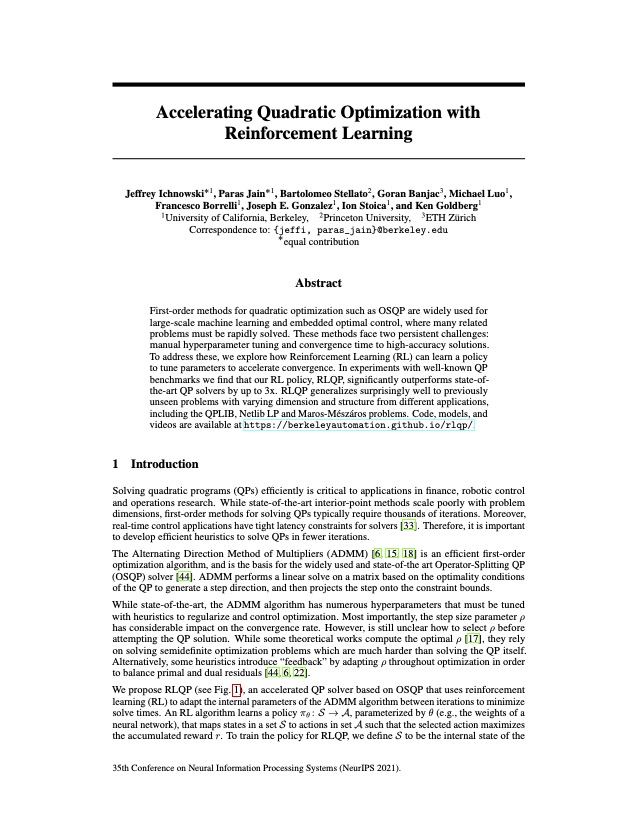

Accelerating Quadratic Optimization with Reinforcement Learning.

Jeffrey Ichnowski; Paras Jain; Bartolomeo Stellato; Goran Banjac; Michael Luo; Francesco Borrelli; Joseph E. Gonzalez; Ion Stoica; and Ken Goldberg.

In NeurIPS 2021, 2021.


@inproceedings{ichnowski2021accelerating,
  title = {Accelerating Quadratic Optimization with Reinforcement Learning},
  author = {Ichnowski, Jeffrey and Jain, Paras and Stellato, Bartolomeo and Banjac, Goran and Luo, Michael and Borrelli, Francesco and Gonzalez, Joseph E and Stoica, Ion and Goldberg, Ken},
  booktitle = {NeurIPS 2021},
  year = {2021}
}

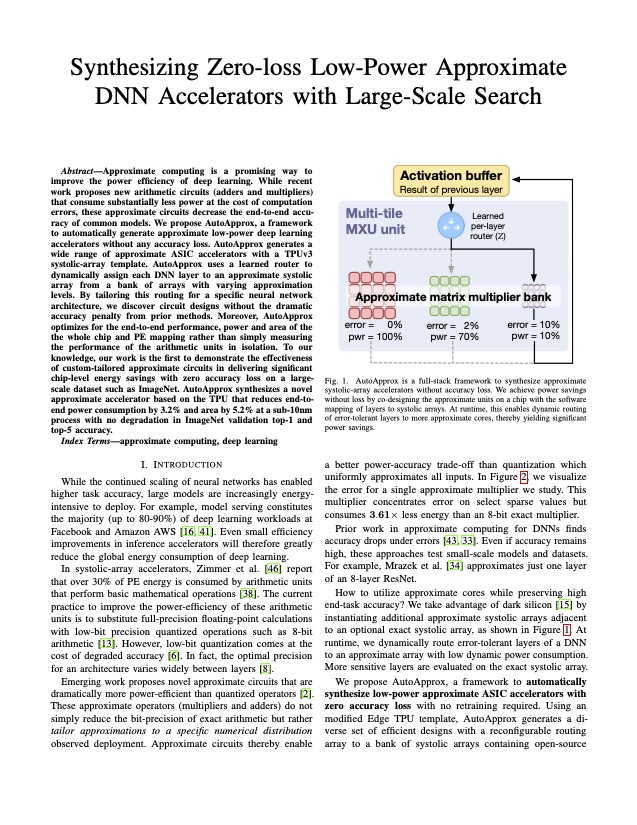

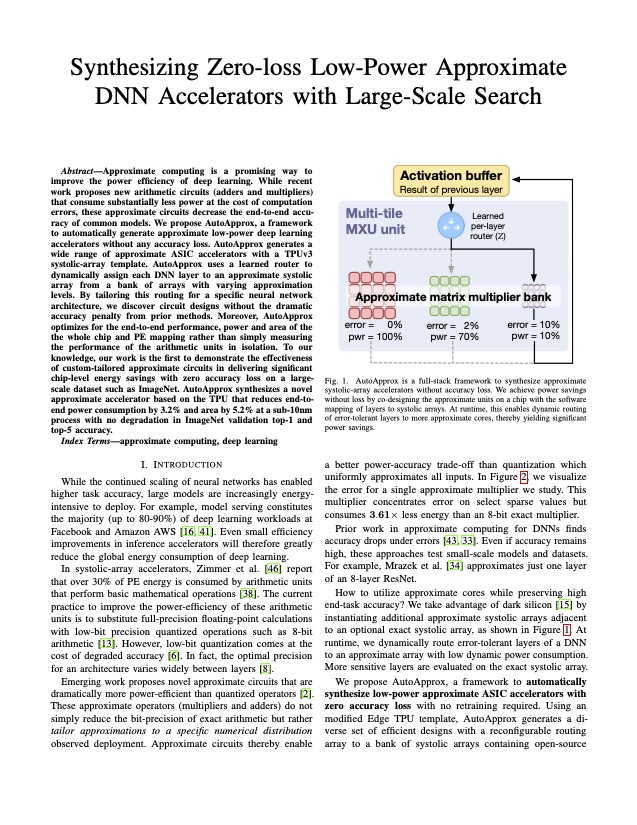

Synthesizing Low-Power Approximate Hardware with Large-Scale Search.

Paras Jain; Safeen Huda; Martin Maas; Joseph Gonzalez; Ion Stoica; and Azalia Mirhoseini.

In MLArchSys at the International Symposium on Computer Architecture 2021, 2021.


@inproceedings{jain2021synthesizing,
  title = {Synthesizing Low-Power Approximate Hardware with Large-Scale Search},
  author = {Jain, Paras and Huda, Safeen and Maas, Martin and Gonzalez, Joseph and Stoica, Ion and Mirhoseini, Azalia},
  booktitle = {MLArchSys at the International Symposium on Computer Architecture 2021},
  year = {2021}
}

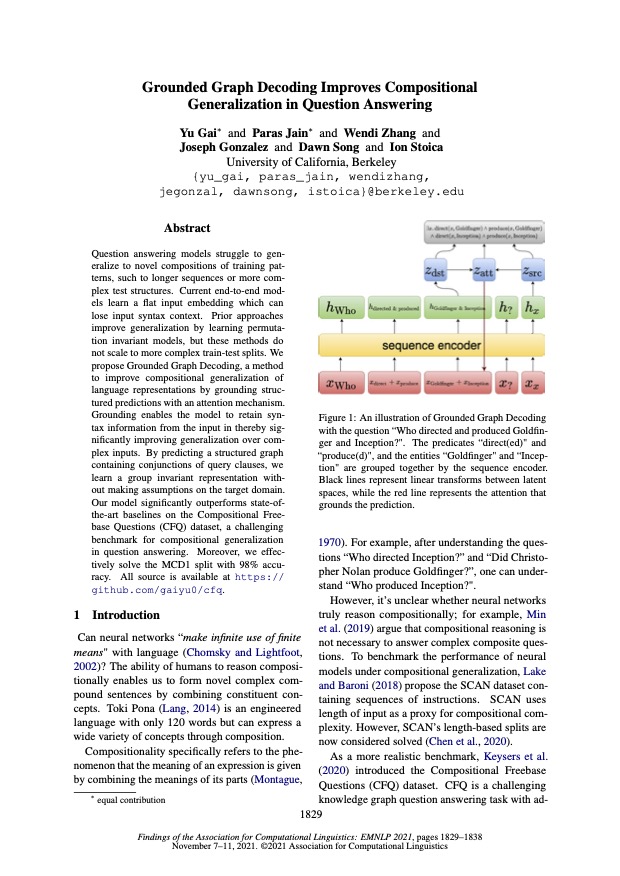

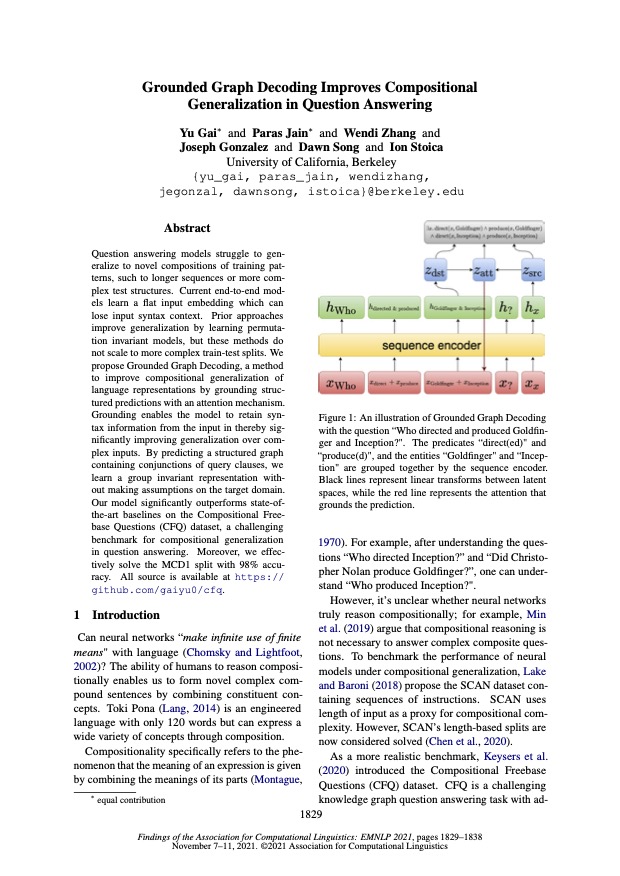

Grounded Graph Decoding improves Compositional Generalization in Question Answering.

Yu Gai; Paras Jain; Wendi Zhang; Joseph E. Gonzalez; Ion Stoica; and Dawn Song.

In Findings of EMNLP 2021, 2021.


@inproceedings{gai2021grounded,
  title = {Grounded Graph Decoding improves Compositional Generalization in Question Answering},
  author = {Gai, Yu and Jain, Paras and Zhang, Wendi and Gonzalez, Joseph E. and Stoica, Ion and Song, Dawn},
  booktitle = {Findings of EMNLP 2021},
  year = {2021}
}

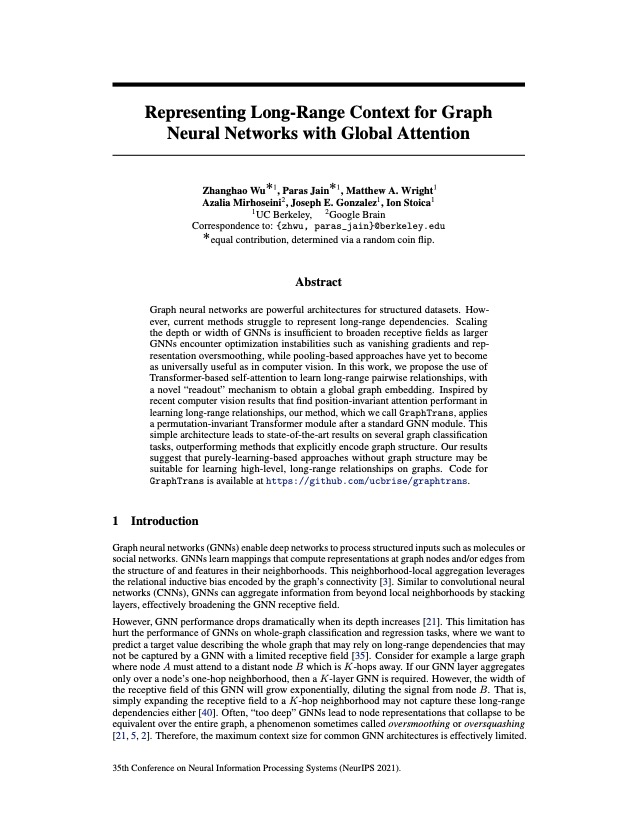

Representing Long-Range Context for Graph Neural Networks with Global Attention.

Zhanghao Wu; Paras Jain; Matthew Wright; Azalia Mirhoseini; Joseph E. Gonzalez; and Ion Stoica.

In NeurIPS 2021, 2021.


@inproceedings{wu2021representing,
  title = {Representing Long-Range Context for Graph Neural Networks with Global Attention},
  author = {Wu, Zhanghao and Jain, Paras and Wright, Matthew and Mirhoseini, Azalia and Gonzalez, Joseph E. and Stoica, Ion},
  booktitle = {NeurIPS 2021},
  year = {2021}
}